Tamanoir in the TEMIC project

Table of Contents

- The TEMIC project

- The context

- Tamanoir in the TEMIC project

- The active services designed for TEMIC

- How to run these active services

- Current limitations

The TEMIC project

TEMIC stands for TEleMaintenance Industrielle Cooperative (Industrial Co-operative Remote Maintenance). TEMIC is a French national project involving several academic partners (LIFC, INRIA, GRTC) and an industrial partner (SWI).

TEMIC is funded by the French ministry of industry, the French ministry of education and research and the French innovation agency (OSÉO anvar).

TEMIC homepage: http://temic.free.fr

The context

TEMIC proposes a hardware and software platform for collaborative remote maintenance: maintenance staff may work remotely and in collaboration with other experts. The integration of innovative networking and mobility solutions leads to competitive maintenance solutions, based on connecting (locally or remotely) multimedia computer data with the appropriate human skills.

The TEMIC platform integrates various technologies:

- Networks: they may be wired (LAN, WAN) or wireless (GPRS, WiFi, Bluetooth), with different characteristics (data rate, ...).

- Terminals: PC, laptop, PDA, mobile phone. Their resources, display capacities and communication protocols differ.

- Multimedia applications: VOD, videoconference, file download. They run with specific protocols and video/audio formats.

To respond to these various constraints, active services have to adapt and optimize the content of the streams passing through the active network node. Multimedia streams are adapted dynamically in order to improve industrial maintenance solutions. The challenge is to provide an architecture that runs in a client/server environment without requiring any modification to the applications installed on the end machines (web servers, video players, ...).

Tamanoir in the TEMIC project

For the TEMIC project, our team has worked on the design and adaptation of an industrial autonomic network node derived from the Tamanoir environment. This Industrial Autonomic Network Node is designed to be deployed on network boxes with limited resources, and thus to be integrated into industrial platforms.

We developed and tested active adaptation network services written specifically for the Tamanoir Execution Environment in Java. Active services applied to multimedia streams crossing the network node may perform data compression, format transcoding, frame resizing, and so on. This kind of adaptation saves network bandwidth (by decreasing the output data rate) and reduces the resources used on the client terminal playing the multimedia data (by reducing the frame rate and the frame size). Because it takes place in the network node, the adaptation is transparent to the applications.

We present two industrial maintenance scenarios on which our experiments are based. These scenarios were designed by the TEMIC project team for use by a company, under a maintenance contract, on a restricted industrial site.

Scenario 1: "Gathering and Survey"

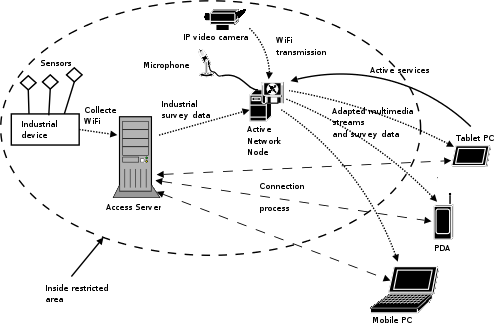

Fig.1: Gathering and survey scenario architecture.

This scenario (Fig. 1) adds multimedia sensors (microphone + video camera) to classical industrial sensors (temperature sensor, contact sensor, ...). In the TEMIC project, the video camera is pointed at a strategic area inside a pump house, whereas the microphone is used to locate unusual engine sounds. They can be combined, in which case the data flow contains both audio and video streams. This scenario remains open to all kinds of survey data: new sensors providing several data streams may be added.

Terminals may be laptops, PDAs, or indeed any mobile device able to communicate using at least HTTP and to play multimedia data. The access server is used both to grant mobile clients access to the system and to transmit survey data coming from industrial sensors to the active network node. The active network node hosts active adaptation network services, which analyse client requests and send them the adapted data. All collected data (provided by sensors) are managed by the active network node: they are stored, deleted when no longer needed, or transmitted to the users who ask for them.

In this scenario, all communications occur over wireless networks, and the end users are located outside the restricted area. End users (maintenance staff) who are authorized to connect to the system can retrieve data stored on the active network node (still images, for instance) and view in real time the video/audio streams coming from the video camera and microphone.

Active adaptation network services deployed for this scenario perform the following tasks:

- on-the-fly adaptation of transmitted multimedia streams to mobile devices. Streams are adapted to network conditions and to the processing and display capacities of the receiving terminal by modifying the frame size, quality factor and encoding format. Audio and video streams are transmitted with a streaming protocol such as RTP, which runs over UDP.

- adaptation of other data, such as still images, with the same modifications as above. The difference with the previous case is that these data are not sent with a streaming protocol: they may be contained in a file (a JPEG image, for instance) and transmitted over HTTP to the mobile devices. The players on the mobile devices are not the same in the two cases.

- storage of some sensor data and multimedia data on the active network node, and management of the storage unit through regular clean-ups.
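
How the regular clean-ups decide that data are no longer needed is not specified here. As a minimal sketch, assuming an age-based policy and a flat storage directory (both assumptions, as is the class name `StorageCleaner`), one clean-up pass on the node could look like this:

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: age-based clean-up of a flat storage directory on the node.
// Not taken from the Tamanoir sources; deleting by age is an assumed policy.
final class StorageCleaner {
    private StorageCleaner() {}

    /**
     * Deletes the regular files in storageDir whose last-modified time is more than
     * maxAgeMillis older than nowMillis, and returns how many files were deleted.
     * Subdirectories are ignored.
     */
    static int purgeOlderThan(Path storageDir, long maxAgeMillis, long nowMillis) throws IOException {
        List<Path> expired = new ArrayList<>();
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(storageDir)) {
            for (Path entry : entries) {
                if (Files.isRegularFile(entry)
                        && nowMillis - Files.getLastModifiedTime(entry).toMillis() > maxAgeMillis) {
                    expired.add(entry);
                }
            }
        }
        // Delete once the directory listing is closed, not while iterating over it.
        for (Path p : expired) {
            Files.delete(p);
        }
        return expired.size();
    }
}
```

Such a pass could be run periodically, for instance with `ScheduledExecutorService.scheduleAtFixedRate`; a real policy might also consider whether the data have already been delivered to the users who requested them.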

Scenario 2: "Analysis and Collaboration"

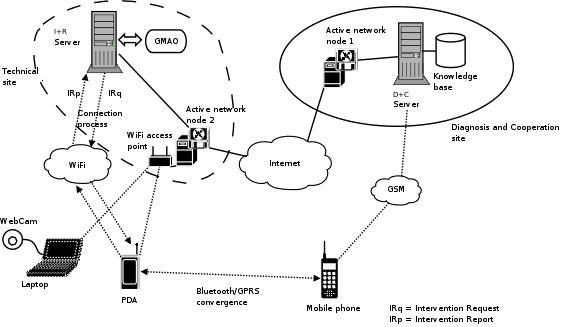

Fig.2: Analysis and collaboration scenario architecture.

This second scenario (see Fig. 2) aims at creating and using a knowledge database on the cooperation server (D+C), which thus accumulates more and more useful information about the industrial equipment to be maintained. This makes it possible, for example, to download information showing a repair (video sequences, images) onto the mobile device before a technical intervention, and to send media data back to the server after the intervention, so that they can be used later to train staff or for a new repair.

The server D+C (Diagnosis and Cooperation) hosts the knowledge database and provides technical support for all platform users. The server I+R (Intervention and Reporting) is directly linked with the "GMAO" (Gestion de Maintenance Assistée par Ordinateur, i.e. computerized maintenance management system): it handles the exchange of reports with mobile devices (the intervention request and the intervention report). Two active network nodes are involved in this scenario. The first hosts active adaptation services that adapt data exchanged between mobile devices and the server D+C. The second acts as a service repository for the other active nodes on the platform and contains up-to-date versions of all deployed services. Moreover, it includes a Web interface called Mapcenter, a toolset that lists the deployed active nodes with their working state and their hosted services.

A technical intervention is planned in two parts:

- The technician gets and reads the intervention request, which describes his mission. This worksheet may contain the list of technical equipment to take along and references to existing data in the knowledge database, such as a web page to read, a file to download or a movie to view. The technician uses his browser to get this information from the server D+C. Downloaded data may be used during the intervention to assist the technician.

- During his intervention, the technician takes photos and films video sequences if needed. At the end of his intervention, the technician writes his intervention report and transmits it to the server I+R. Then, if he has collected useful information such as images or videos, he transmits these data to the server D+C, where they enrich the knowledge base.

During these two steps, multimedia data exchanged between the server D+C and the mobile devices pass through active network node 1. Communications occur over wired and wireless networks, depending on where the technician is when he accesses the data and on his terminal itself (for example, he may choose to connect his laptop to a wired network at his office).

Active adaptation network services deployed for this scenario perform the following tasks:

- adaptation of multimedia data streams (movies or images) transmitted from the server D+C to mobile devices connected through a wired or wireless network. This implies modifying the frame size, quality factor, encoding format and transport protocol.

- adaptation and enrichment of data transmitted by mobile devices to the D+C server (for example, by adding the date and the technician's name).
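
How the date and the technician's name are attached to uploaded data is not described. One possible sketch, assuming a naming convention and a metadata map that are purely hypothetical (`UploadAnnotator` is not part of the TEMIC sources), is:

```java
import java.time.LocalDate;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical annotation scheme for data uploaded by technicians.
final class UploadAnnotator {
    private UploadAnnotator() {}

    /** Builds a storage name such as "2006-05-12_J_Dupont_pump3.jpg". */
    static String storageName(String originalName, String technician, LocalDate date) {
        // Keep only the file name, dropping any directory part sent by the client.
        String baseName = originalName.replaceAll(".*[/\\\\]", "");
        // Collapse every run of characters unsafe in file names into a single '_'.
        String safeTech = technician.trim().replaceAll("[^A-Za-z0-9-]+", "_");
        return date + "_" + safeTech + "_" + baseName; // LocalDate prints as yyyy-MM-dd
    }

    /** Metadata record that could be stored next to the file in the knowledge base. */
    static Map<String, String> metadata(String originalName, String technician, LocalDate date) {
        Map<String, String> m = new LinkedHashMap<>();
        m.put("date", date.toString());
        m.put("technician", technician.trim());
        m.put("originalName", originalName);
        return Collections.unmodifiableMap(m);
    }
}
```

Encoding the annotation in the stored name keeps it attached to the file, while a separate metadata record leaves the original name intact; which one suits the D+C server is an open choice here.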

The active services designed for TEMIC

So far, three active services have been developed for this project, all designed to adapt multimedia data.

Implementation

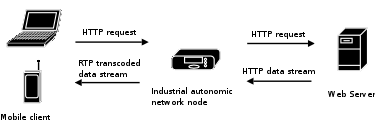

Our framework is based on a client/server model. The client plays a multimedia stream sent by a web server. We use standard software on the server and on the client: an Apache web server and various players such as VLC, PocketTV, Totem... Media adaptation is performed by the active service while data are transmitted from the server to the client.

Our active services use the Java Media Framework (JMF), version 2.1.1, for media adaptation. The JMF API enables the display, capture, encoding, decoding and streaming of multimedia data in Java applications. JMF also provides an API for RTP (Real-time Transport Protocol), which is suited to the transmission of real-time media. JMF includes a number of codecs to process data streams.

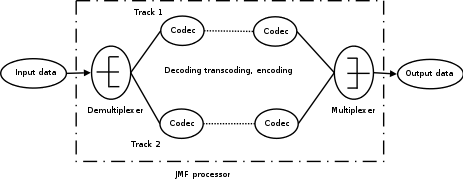

The JMF architecture contains a processing unit called a JMF Processor.

Fig.3: JMF processor architecture.

The processing performed by a Processor is split into four main stages (fig. 3):

- Demultiplexing - an interleaved media stream is first demultiplexed into separate tracks of data streams that can be processed individually (audio stream and video stream).

- Data transcoding - each track of data can be transcoded from one format to another. If the individual tracks are compressed, they are first decoded.

- Multiplexing - the separate tracks can be multiplexed to form an interleaved stream of a particular container content type.

- Rendering - the media is presented to the user.

The active service configures the processor by giving it the data source, the output media type, the video frame size, the audio sample rate and the output protocol.

Our framework supports MJPEG and MPEG-1 as input video formats, and MPEG Audio Layer II (mp2) and PCM as input audio formats. Data are transcoded into H263 for the video track and into LINEAR PCM for the audio track. These formats were chosen among the media formats supported by JMF: H263 provides medium quality with moderate CPU requirements and low bandwidth requirements, whereas PCM provides high quality with low CPU requirements but high bandwidth requirements.
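
The format policy above can be summarized as a small mapping. The sketch below uses plain strings as labels (an assumption made to keep it independent of JMF); in an actual service these choices would be expressed as JMF `Format` objects, such as `VideoFormat.H263` and `AudioFormat.LINEAR`, set on the processor's track controls. `TranscodePolicy` is a hypothetical name.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

// Illustrative format policy; the string labels are chosen for this sketch,
// they are not JMF format constants.
final class TranscodePolicy {
    private static final Set<String> VIDEO_INPUTS =
            Collections.unmodifiableSet(new HashSet<>(Arrays.asList("MJPEG", "MPEG-1")));
    private static final Set<String> AUDIO_INPUTS =
            Collections.unmodifiableSet(new HashSet<>(Arrays.asList("MP2", "PCM")));

    private TranscodePolicy() {}

    /** Every supported video input is transcoded to H263 (medium quality, low bandwidth). */
    static String videoOutput(String inputEncoding) {
        requireSupported(VIDEO_INPUTS, inputEncoding, "video");
        return "H263";
    }

    /** Every supported audio input is transcoded to linear PCM (high quality, low CPU). */
    static String audioOutput(String inputEncoding) {
        requireSupported(AUDIO_INPUTS, inputEncoding, "audio");
        return "LINEAR";
    }

    private static void requireSupported(Set<String> supported, String encoding, String kind) {
        if (!supported.contains(encoding.toUpperCase(Locale.ROOT))) {
            throw new IllegalArgumentException("unsupported " + kind + " input: " + encoding);
        }
    }
}
```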

The VideoAdaptS service

The VideoAdaptS service is used for transcoding (if necessary) and transmitting a media file (containing audio and video, or only video frames) to the client (the player).

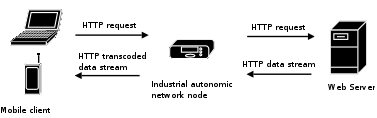

The client connects to the active network node and sends it the URL of the multimedia file it wants to download. The URL also identifies the active service to apply to the resulting data stream. The active service analyses this HTTP request in order to extract the client description, then checks whether the media must be transcoded into a less resource-consuming format. If so, it passes the parameters to the JMF processor, which processes the data and creates a file in the specified format containing the transcoded data; this file is then sent to the client. Otherwise, the request is simply forwarded to the server and the response (containing the requested data) is sent back to the client. In both cases, data are exchanged between the active service on the network node and the web server over HTTP, and the resulting file is transmitted to the client over HTTP.

Fig.4: The VideoAdaptS service mechanism.

The VideoStreamS service

The VideoStreamS service is used for transcoding (if needed) and real-time transmission over RTP of a video sequence coming from a source file or from a capture device.

The mechanism is similar to that of the previous service; the main difference is the output protocol. Instead of sending an entire file over HTTP, this service streams the video content over RTP, which enables immediate playback by the client. The user may choose between a video source file and a video sequence coming from a capture device. In the latter case, the video camera must be connected directly to the active network node. This is well suited to the first scenario, "Gathering and Survey".

Fig.5: The VideoStreamS service mechanism.

This service only transmits the video track, because with RTP each media stream is transmitted in a separate RTP session, and RTP players listen to a single session only.

Use cases

The two services suit different cases: VideoAdaptS is preferred when the user wants to download the file onto his terminal device, so that he can play it again, in a format suited to his terminal's capacities, without any additional network request. It is also used when no RTP player is available on the terminal device. VideoStreamS is better suited to real-time viewing, for instance when the video source is a video camera.
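
These guidelines amount to a simple decision rule. The following helper is hypothetical (`ServiceChooser` and its parameters are illustrative, not part of the Tamanoir sources); it only encodes the use cases described above:

```java
// Hypothetical helper encoding the VideoAdaptS / VideoStreamS usage guidelines.
final class ServiceChooser {
    enum Service { VIDEO_ADAPT_S, VIDEO_STREAM_S }

    private ServiceChooser() {}

    /**
     * @param liveCamera     the source is the video camera attached to the node
     * @param wantsLocalCopy the user wants to keep the file and play it again offline
     * @param hasRtpPlayer   the terminal has a player able to receive RTP
     */
    static Service choose(boolean liveCamera, boolean wantsLocalCopy, boolean hasRtpPlayer) {
        if (liveCamera) {
            // VideoAdaptS works on media files fetched from a web server, not on a camera.
            if (!hasRtpPlayer) {
                throw new IllegalArgumentException("live camera viewing requires an RTP player");
            }
            return Service.VIDEO_STREAM_S;
        }
        if (wantsLocalCopy || !hasRtpPlayer) {
            return Service.VIDEO_ADAPT_S;  // whole adapted file sent over HTTP
        }
        return Service.VIDEO_STREAM_S;     // immediate playback over RTP
    }
}
```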

The VideoLiveS service

The VideoLiveS service is also used for transcoding (if needed) and real-time transmission over RTP of a video sequence coming from a capture device. Unlike the VideoStreamS service, this service can transmit to the client, in real time, a video sequence captured on another computer, not only locally. It is therefore better suited to a video conference scenario.

How to run these active services

The active services described above use the JMF framework. For reference, they have been compiled and run with J2SE 1.4.2 and JMF 2.1.1. JMF includes an application called JMStudio, which can be used as a player.

The VideoAdaptS service

It listens on port 9090.

Description: in an HTTP request, the client requests a file containing video and audio (audio is transmitted as well, but the focus is on video). The service parses the request to determine whether this client needs the data to be adapted. Currently, the requested format and size are given directly in the URL, but with minor modifications to the source code they could instead be derived from the client type by testing the User-Agent header. If needed, the service uses JMF to transcode the audio and video into LINEAR PCM and H263 respectively; otherwise the file is transmitted as is.
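
The User-Agent variant mentioned above could look roughly like the sketch below. The substrings tested and the profile chosen for each kind of terminal are assumptions for illustration only; `ClientProfile` is a hypothetical class, not part of the service sources.

```java
import java.util.Locale;

// Hypothetical: derive the target format and size from the User-Agent header
// instead of reading them from the URL. The tested substrings are assumptions.
final class ClientProfile {
    final String format; // "H263" or "JPEG"
    final String size;   // "CIF", "QCIF" or "SQCIF"

    private ClientProfile(String format, String size) {
        this.format = format;
        this.size = size;
    }

    static ClientProfile fromUserAgent(String userAgent) {
        String ua = userAgent == null ? "" : userAgent.toLowerCase(Locale.ROOT);
        if (ua.contains("windows ce") || ua.contains("pocketpc")) {
            return new ClientProfile("H263", "QCIF");  // PDA-class screen
        }
        if (ua.contains("symbian") || ua.contains("midp")) {
            return new ClientProfile("H263", "SQCIF"); // phone-class screen
        }
        return new ClientProfile("H263", "CIF");       // laptop or desktop
    }
}
```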

How to use this service: open a video player on the client and choose to open a URL, e.g.

http://153.69.1.10:9090/H263/CIF/140.77.13.75/~mchaudie/AF1-Wingtip-Vortices-mjpeg.avi

where:

153.69.1.10 = the IP address on which the Tamanoir node is listening;

9090 = the port of the service;

H263 = the desired output format (H263 or JPEG);

CIF = the desired output size (CIF=352x288, QCIF=176x144 or SQCIF=128x96; if it is empty, the size is unchanged);

140.77.13.75 = the IP address of the server containing the file to download;

~mchaudie/AF1-Wingtip-Vortices-mjpeg.avi = the path of the requested file.
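
The URL layout above can be parsed with a few lines of Java. This is a sketch under assumptions: the class name `AdaptRequest` is hypothetical, the input is the path taken from the HTTP request line (for example `/H263/CIF/140.77.13.75/~mchaudie/AF1-Wingtip-Vortices-mjpeg.avi`), and an empty size is assumed to appear as an empty segment between two slashes.

```java
import java.util.Locale;

// Hypothetical parser for VideoAdaptS request paths of the form /FORMAT/SIZE/SERVER/PATH.
final class AdaptRequest {
    final String format;  // "H263" or "JPEG"
    final int[] size;     // {width, height}, or null when the size is left unchanged
    final String server;  // address of the web server holding the file
    final String path;    // path of the file on that server

    private AdaptRequest(String format, int[] size, String server, String path) {
        this.format = format;
        this.size = size;
        this.server = server;
        this.path = path;
    }

    static AdaptRequest parse(String requestPath) {
        String p = requestPath.startsWith("/") ? requestPath.substring(1) : requestPath;
        // A limit of 4 keeps the slashes of the file path inside the last segment.
        String[] parts = p.split("/", 4);
        if (parts.length < 4 || parts[2].isEmpty() || parts[3].isEmpty()) {
            throw new IllegalArgumentException("expected /FORMAT/SIZE/SERVER/PATH: " + requestPath);
        }
        String format = parts[0].toUpperCase(Locale.ROOT);
        if (!format.equals("H263") && !format.equals("JPEG")) {
            throw new IllegalArgumentException("unsupported format: " + parts[0]);
        }
        return new AdaptRequest(format, dimensions(parts[1]), parts[2], parts[3]);
    }

    /** Maps the documented size keywords to pixel dimensions. */
    static int[] dimensions(String sizeName) {
        switch (sizeName.toUpperCase(Locale.ROOT)) {
            case "":      return null;
            case "CIF":   return new int[] {352, 288};
            case "QCIF":  return new int[] {176, 144};
            case "SQCIF": return new int[] {128, 96};
            default: throw new IllegalArgumentException("unknown size: " + sizeName);
        }
    }

    /** URL the service would fetch from the web server over HTTP. */
    String upstreamUrl() {
        return "http://" + server + "/" + path;
    }
}
```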

Note that the file must be entirely transcoded before it can be transmitted to the client. Choose a short sequence (or set start and end times in the source), because a player that receives no response closes the connection after a delay that varies from one player to another.

The VideoStreamS service

It listens on port 8888.

Description: when the service receives the request from the client, it parses it to determine the format and size requested by the client. It then sends the streams over RTP/UDP in a video format compatible with the input data (H263/RTP, MPEG/RTP or JPEG/RTP). The destination port is hard-coded in the source code and is currently the same for all streams (6200).

How to use this service: to choose the file or video stream to receive, the client also uses a URL. In practice, this URL may be found on a web page available on the server, for instance (the user then selects a data source), or it may be contained in a file sent to the user.

Here are some examples of such URLs:

http://153.69.1.10:8888/H263/QCIF/140.77.13.75/~mchaudie/AF1.avi

http://153.69.1.10:8888/H263/QCIF/140.77.13.75/videodevice

The URL is constructed as follows:

153.69.1.10 = the IP address on which the Tamanoir node is listening;

8888 = the port of the service;

H263 = the desired output format (H263 or JPEG);

QCIF = the desired output size (CIF=352x288, QCIF=176x144 or SQCIF=128x96; if it is empty, the size is unchanged);

140.77.13.75 = the IP address of the server containing the file to download;

~mchaudie/AF1.avi = the path of the requested file, or the keyword videodevice to view the stream coming from the video camera (in this case the server address is unused).
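
For VideoStreamS, the new element is the `videodevice` keyword, which takes the place of the file path. A hypothetical sketch of how a request could be mapped to its source (the class `StreamRequest` is illustrative, and the same /FORMAT/SIZE/SERVER/PATH layout is assumed):

```java
// Hypothetical parser for VideoStreamS request paths /FORMAT/SIZE/SERVER/PATH,
// where PATH may be the keyword "videodevice" (camera attached to the node).
final class StreamRequest {
    enum Source { FILE, CAPTURE_DEVICE }

    static final String CAPTURE_KEYWORD = "videodevice";

    final String format;   // e.g. "H263" or "JPEG"
    final String size;     // "CIF", "QCIF", "SQCIF", or "" for unchanged
    final Source source;
    final String fileUrl;  // upstream HTTP URL of the file, or null for the camera

    private StreamRequest(String format, String size, Source source, String fileUrl) {
        this.format = format;
        this.size = size;
        this.source = source;
        this.fileUrl = fileUrl;
    }

    static StreamRequest parse(String requestPath) {
        String[] parts = requestPath.replaceFirst("^/", "").split("/", 4);
        if (parts.length < 4 || parts[3].isEmpty()) {
            throw new IllegalArgumentException("expected /FORMAT/SIZE/SERVER/PATH: " + requestPath);
        }
        if (parts[3].equals(CAPTURE_KEYWORD)) {
            // The server address segment is present but unused in this case.
            return new StreamRequest(parts[0], parts[1], Source.CAPTURE_DEVICE, null);
        }
        return new StreamRequest(parts[0], parts[1], Source.FILE,
                "http://" + parts[2] + "/" + parts[3]);
    }
}
```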

An example of a web page containing such URLs (media_list.html) is available in the CVS repository.

Then open an RTP session on the client player to listen for the RTP streams: JMStudio requires the source IP address and the destination port of the RTP stream (currently 6200), whereas VLC only requires the destination port. Depending on the player, the RTP session must be opened before the service sends the RTP streams (VLC), or the order does not matter (JMStudio).
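
When a player shows nothing, it can help to check what actually arrives on the destination port. The sketch below parses the 12-byte RTP fixed header defined in RFC 3550 and names a few static payload types from RFC 3551 (26 for JPEG, 32 for MPEG video, 34 for H263). The class `RtpHeader` is hypothetical, and header extensions and padding are not interpreted.

```java
// Minimal parser for the RTP fixed header (RFC 3550, section 5.1).
// Header extensions (X bit) and padding (P bit) are not interpreted.
final class RtpHeader {
    final int version;
    final boolean marker;
    final int payloadType;
    final int sequenceNumber;
    final long timestamp;
    final long ssrc;
    final int headerLength; // 12 bytes plus 4 bytes per CSRC identifier

    private RtpHeader(int version, boolean marker, int payloadType, int sequenceNumber,
                      long timestamp, long ssrc, int headerLength) {
        this.version = version;
        this.marker = marker;
        this.payloadType = payloadType;
        this.sequenceNumber = sequenceNumber;
        this.timestamp = timestamp;
        this.ssrc = ssrc;
        this.headerLength = headerLength;
    }

    static RtpHeader parse(byte[] packet, int length) {
        if (length < 12) {
            throw new IllegalArgumentException("shorter than the 12-byte RTP fixed header");
        }
        int b0 = packet[0] & 0xFF;
        int b1 = packet[1] & 0xFF;
        int version = b0 >>> 6;
        if (version != 2) {
            throw new IllegalArgumentException("not RTP version 2: " + version);
        }
        int headerLength = 12 + 4 * (b0 & 0x0F); // low 4 bits: CSRC count
        if (length < headerLength) {
            throw new IllegalArgumentException("truncated CSRC list");
        }
        return new RtpHeader(version, (b1 & 0x80) != 0, b1 & 0x7F,
                ((packet[2] & 0xFF) << 8) | (packet[3] & 0xFF),
                readUint32(packet, 4), readUint32(packet, 8), headerLength);
    }

    private static long readUint32(byte[] b, int off) {
        return ((b[off] & 0xFFL) << 24) | ((b[off + 1] & 0xFFL) << 16)
                | ((b[off + 2] & 0xFFL) << 8) | (b[off + 3] & 0xFFL);
    }

    /** Names of a few static payload types from RFC 3551. */
    static String payloadName(int payloadType) {
        switch (payloadType) {
            case 26: return "JPEG";
            case 32: return "MPV (MPEG video)";
            case 34: return "H263";
            default: return "other (" + payloadType + ")";
        }
    }
}
```

To use it, a small test program could bind `new DatagramSocket(6200)`, call `receive` with a `DatagramPacket`, and pass `getData()` and `getLength()` to `RtpHeader.parse`. It would have to run instead of the player, since the player normally holds that port.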

The VideoLiveS service

It listens on port 9191.

Description: when the service receives the request from the client, it parses it to find the address of the machine sending RGB video frames (called the server machine), from which it then receives the RGB frames produced by the video capture device. It uses JMF to adapt these frames and sends the resulting stream to the client. Currently, video frames are transcoded into H263/RTP at a size of 352x288, and the player on the client side receives up to 7 fps.

The way the service receives RGB frames depends strongly on the application used on the server machine, which has to get RGB video frames from the camera device and send them to the service. For our tests, we designed a simple Java application called VideoFrameGrabber; its sources and class files are available in the CVS repository. When run, this application opens the video device, starts a first thread that gets RGB frames, and then listens for incoming TCP connections. When the service is activated, it opens a TCP socket and tries to connect to the sender application. Once the application is informed of the connection, it starts a second thread that sends the captured RGB frames over the TCP connection.
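
The wire format used by VideoFrameGrabber is not described here. Purely as an illustration, assuming each frame is sent as its width and height followed by raw 24-bit RGB pixels (a hypothetical format, as is the class `RgbFrameCodec`), both ends of the TCP connection could be written as follows. Under that assumption, one 352x288 frame takes 352 × 288 × 3 = 304,128 bytes, so an uncompressed feed at 7 frames per second would need 2,128,896 bytes/s (about 17 Mbit/s), which shows why the frames are transcoded to H263 before being sent to the client.

```java
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.EOFException;
import java.io.IOException;

// Hypothetical wire format, NOT the actual VideoFrameGrabber protocol:
// each frame is [int width][int height][width * height * 3 bytes of packed RGB].
final class RgbFrameCodec {
    static final int BYTES_PER_PIXEL = 3;   // assumption: 24-bit RGB
    static final int MAX_DIMENSION = 4096;  // sanity bound against corrupted headers

    static final class Frame {
        final int width;
        final int height;
        final byte[] rgb;

        Frame(int width, int height, byte[] rgb) {
            this.width = width;
            this.height = height;
            this.rgb = rgb;
        }
    }

    private RgbFrameCodec() {}

    /** Sender side (grabber): writes one frame and flushes it onto the connection. */
    static void writeFrame(DataOutputStream out, int width, int height, byte[] rgb) throws IOException {
        if (rgb.length != width * height * BYTES_PER_PIXEL) {
            throw new IllegalArgumentException("pixel buffer does not match " + width + "x" + height);
        }
        out.writeInt(width);
        out.writeInt(height);
        out.write(rgb);
        out.flush();
    }

    /**
     * Receiver side (active service): reads the next frame, or returns null when the
     * stream ends while reading the width, i.e. the sender has closed the connection.
     */
    static Frame readFrame(DataInputStream in) throws IOException {
        int width;
        try {
            width = in.readInt();
        } catch (EOFException closed) {
            return null;
        }
        int height = in.readInt();
        if (width <= 0 || height <= 0 || width > MAX_DIMENSION || height > MAX_DIMENSION) {
            throw new IOException("invalid frame size " + width + "x" + height);
        }
        byte[] rgb = new byte[width * height * BYTES_PER_PIXEL];
        in.readFully(rgb); // blocks until the whole frame has arrived
        return new Frame(width, height, rgb);
    }
}
```

A `null` result from `readFrame` matches the behaviour described for VideoLiveS: when the grabber stops and closes the TCP connection, the receiving loop ends and the service thread can stop.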

How to use this service: to activate this service, the client also uses a URL. In practice, this URL may be written on a web page available on the server, for instance, or it may be contained in a file sent to the user.

Here is an example of such a URL:

http://10.0.1.2:9191/10.0.1.5/videolive

The URL is constructed as follows:

10.0.1.2 = the IP address on which the Tamanoir node is listening;

9191 = the port of the service;

10.0.1.5 = the IP address of the machine running the application capturing video frames;

videolive = a keyword.

Open an RTP session with JMStudio on the client to listen for the RTP streams (JMStudio requires the source IP address and the destination port of the RTP stream, currently 6200). When the server application sending RGB frames is stopped, it closes the TCP connection, which stops the service thread on the active node.

Current limitations

- There appear to be some incompatibilities between the RTP streams produced by JMF and the RTP streams that VLC is able to read.

- Many of the limitations in the choice of transcoding formats, frame sizes and frame rates come from JMF.